From Golden Paths to Agentic AI: A New Era of Kubernetes Management

“And what is the Golden Path? you ask. It is the survival of humankind, nothing more nor less.”

– Leto II, the God Emperor of Dune, Frank Herbert (1981)

Introduction

In Frank Herbert’s Children of Dune, the Golden Path is a vision of survival, a framework for guiding humanity through chaos and rescuing it from stagnation and ultimate extinction. With his spice-induced prescience, Leto II Atreides perceives countless paths leading to extinction and only one that secures the species’ survival. This path is marked by sacrifice, foresight, and unwavering discipline, and it is chosen out of necessity because no other path ensures survival.

The Golden Path concept has transcended its literary origins, inspiring disciplines far removed from Arrakis’ desert sands. In software engineering, it has come to symbolize structured and supported approaches that guide teams through the labyrinth of choices (tools, frameworks, patterns, operational complexities) inherent in building and managing modern technology stacks. These paths illuminate a clear route forward, freeing teams to focus on creation rather than navigation.

Golden paths are essential in Kubernetes, a platform as complex and dynamic as Herbert’s universe. They offer opinionated, well-curated workflows optimized for efficiency and consistency, yet flexible enough for those willing to venture beyond and bear the cost of experimentation. Amid the sprawling complexity of Kubernetes configurations, these paths provide clarity and enable teams to move quickly and confidently, reduce friction, and accelerate delivery.

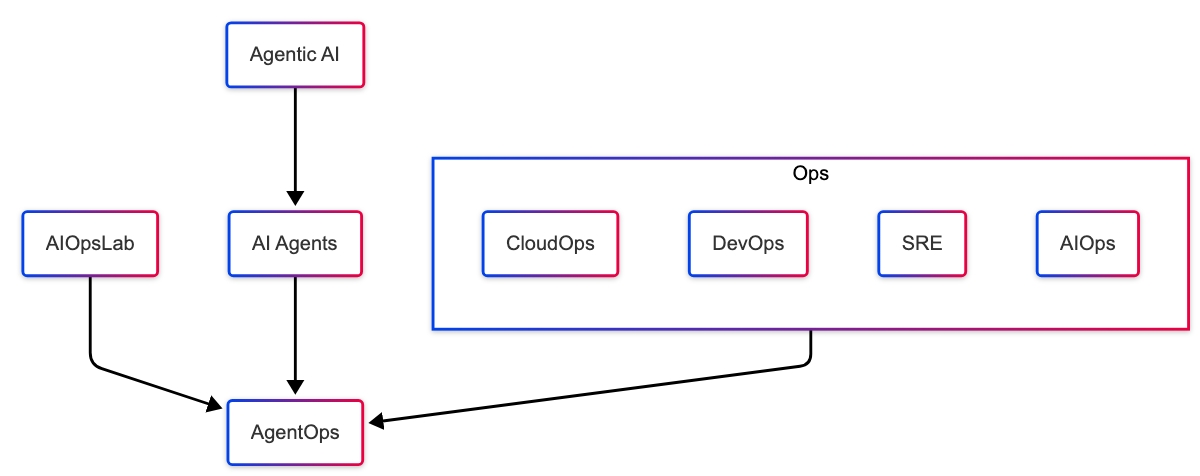

However, static Golden Paths can only take organizations so far in an increasingly dynamic and unpredictable operational landscape. This is where the transition to Agentic AI becomes critical. Unlike static workflows, AI-driven systems can continuously adapt to evolving conditions. Agentic AI enables Kubernetes workflows not only to follow predefined best practices but also to learn, adapt, and execute decisions in real time. By integrating AI into the operational fabric, organizations can move from reactive operations to proactive, self-healing systems that optimize resource utilization, prevent outages, and improve overall system resilience.

Kubernetes Operators have automated key workflows through reactive reconciliation loops, but the future goes further. With AI-powered reconciliation, these workflows become adaptive, self-healing, and aware of real-time data. For example, an AI-driven agent could analyze workload trends, automatically scale resources ahead of traffic spikes, detect anomalous behaviour, and initiate corrective actions without human intervention.

Much like Leto II’s vision, the Golden Path in Kubernetes must now expand to Agentic AI, where operational excellence becomes dynamic, proactive, and continuously improving. This blog explores the evolution of Golden Paths, how they have shaped DevOps practices as they manifest in Kubernetes, and how AI agents define the next generation of cloud-native operations. Building on the principles of DevOps with AI Agents laid out in our ‘DevOps Handbook with AI Agents: Transforming Automation and Efficiency,’ we examine how these intelligent agents are revolutionizing foundational DevOps practices, ushering in a new era of efficiency and innovation within Kubernetes environments.

We’ll put the Butlerian Jihad aside for now. There is no need to declare war on our infrastructure just yet!

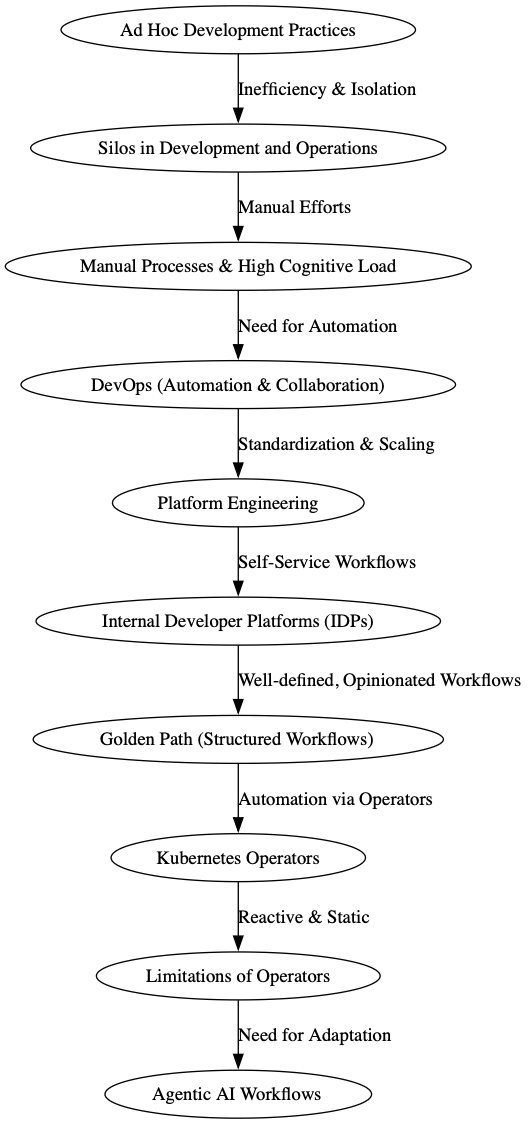

The Evolution of Golden Paths

The concept of golden paths in software engineering didn’t emerge overnight. It was forged through years of trial and error and the relentless pursuit of operational excellence. What began as informal best practices has evolved into structured, opinionated frameworks that streamline development and operations. This journey reflects the broader evolution of DevOps and platform engineering, where the goal has always been to balance innovation with stability.

Origins in DevOps

In the early days of DevOps, engineering teams operated in fragmented ecosystems (silos) filled with diverse tools, frameworks, and deployment strategies. While offering flexibility, this landscape often led to “rumour-driven development,”[1] an environment where knowledge was tribal, scattered, and inconsistently applied. Developers faced high cognitive loads, spending more time deciding and debating which tools to use than building software.

The growing complexity of cloud-native infrastructure demanded more than ad hoc solutions. This challenge gave rise to the first generation of Golden Paths, typically delivered as an internal platform with a creative name. These platforms provided opinionated, well-supported workflows designed to eliminate guesswork and streamline software delivery. By consolidating tooling, standardizing practices, and embedding best practices into workflows, Golden Paths began to offer teams a clear route to follow.

Platform Engineering and Self-Service

As cloud environments became more complex, standardization, scalability, and developer empowerment became more critical. Platform Engineering and Internal Developer Platforms (IDPs) have played a transformative role in modern cloud-native development, further streamlining best practices.

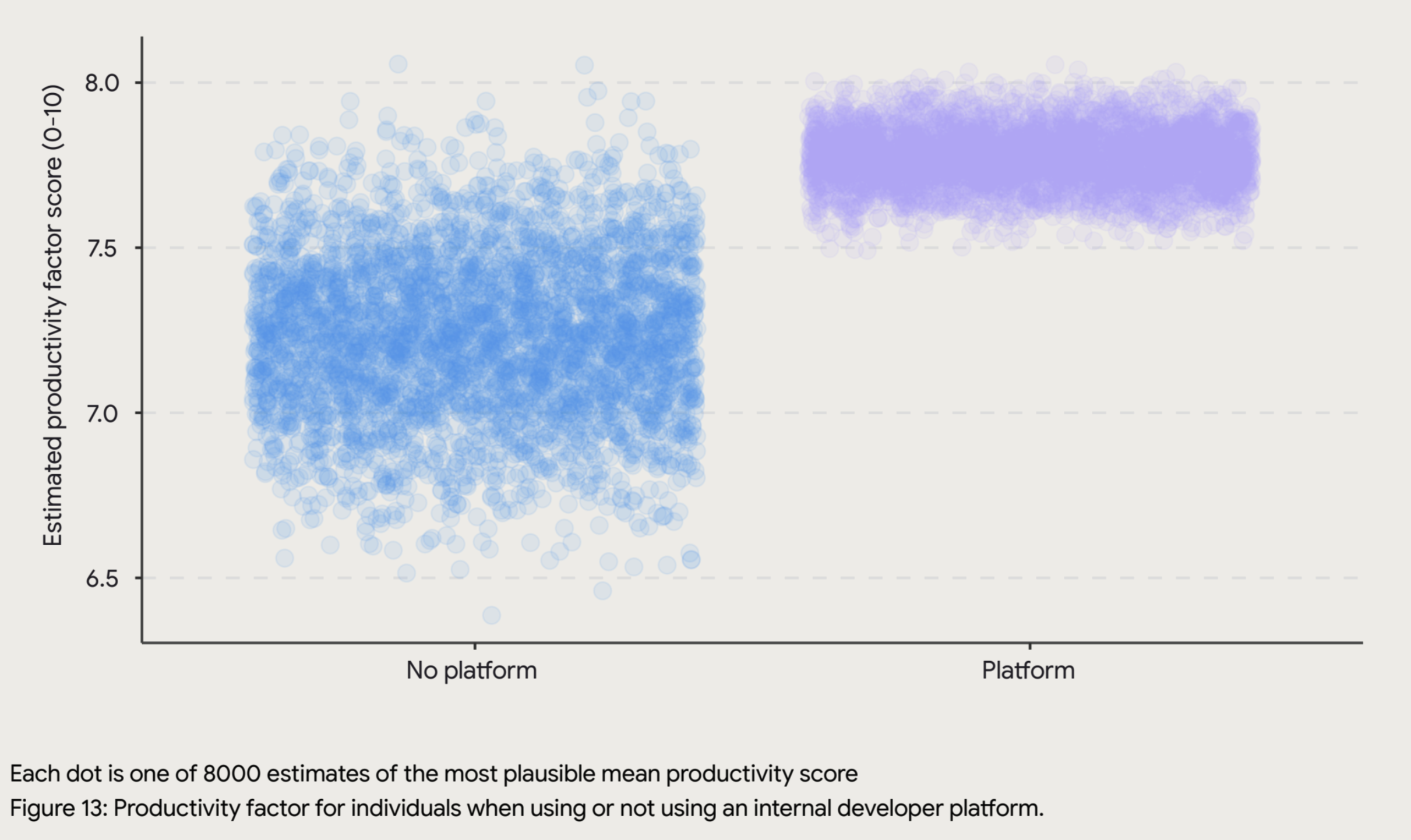

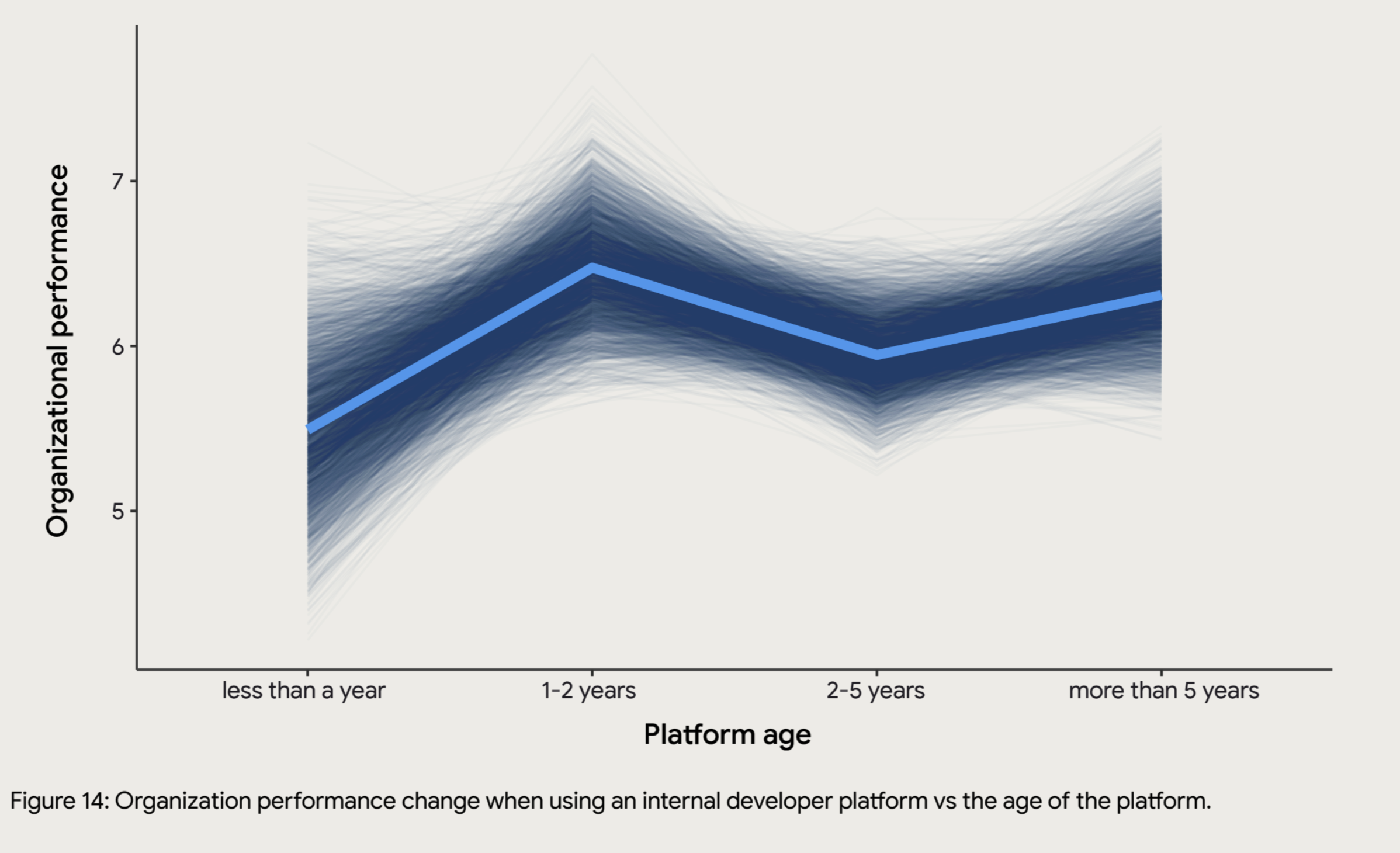

“Internal developer platform users had 8% higher levels of individual productivity and 10% higher levels of team performance. Additionally, an organization’s software delivery and operations performance increases 6% when using a platform.” [5]

What is Platform Engineering?

Platform engineering builds and maintains internal platforms that streamline software delivery and operations. These platforms offer opinionated, self-service workflows that abstract the underlying infrastructure complexity while maintaining flexibility for teams to innovate. The goal is to reduce developers’ cognitive load (and toil) and improve operational consistency without sacrificing agility.

Platform teams treat their platform as a product, evolving it based on feedback and operational needs. This product mindset ensures the platform continually addresses the pain points of developers and operations teams.

IDPs: The Backbone of Self-Service

An IDP is the backbone of modern platform engineering. Built by platform teams, an IDP integrates various tools and technologies into Golden Paths, which are well-defined, supported workflows that guide development teams from code to production.

Key Components of an IDP include [4]:

- Developer Portals: Centralized access to tools and templates.

- Service Catalogs: Reusable services promoting standardization.

- Infrastructure Orchestration: Automated resource provisioning.

- Deployment Automation: CI/CD pipelines enforcing security policies.

- Observability Tools: Integrated monitoring and logging.

IDPs empower developers by providing self-service capabilities, allowing them to:

- Provision Environments: Spin up fully configured environments on demand without relying on operations teams.

- Deploy Applications: Use standardized deployment workflows to push code into production confidently.

- Access Golden Paths: Follow predefined workflows incorporating best practices, security guardrails, and compliance measures.

This self-service model significantly reduces bottlenecks and fosters a culture of ownership and accountability. Developers no longer need to navigate complex infrastructure or wait for manual approvals, enabling faster delivery and innovation. It also boosts developer autonomy and productivity, in line with data showing that IDP users experience higher productivity and better software delivery outcomes. (At least, productivity increases initially with IDPs.)

“As with other transformations, the “j-curve” also applies to platform engineering, so productivity gains will stabilize through continuous improvement.” [5]

The Bridge to Agentic AI Workflows

While Platform Engineering and IDPs have streamlined infrastructure management, they remain reactive. Scaling, security enforcement, and incident response still rely on human intervention. Agentic AI bridges this gap by enabling self-healing systems and proactive resource management. By codifying Golden Paths into automated workflows, we can now leverage AI agents to:

- Identify and resolve incidents autonomously.

- Optimize resource usage based on workload patterns.

- Enforce security policies in real time.

In Kubernetes, this evolution means moving from manual processes and supported workflows to self-healing, adaptive infrastructure powered by AI. Learn more about DevOps AI Agents for Kubernetes here.

Golden Paths in Action

Leading organizations have implemented Golden Paths to streamline operations:

- Spotify: Combating fragmentation with their platform, Backstage, which curates best practices and promotes self-service adoption[1].

- Google Cloud: Providing “templated compositions” for rapid development, abstracting away infrastructure complexities[2].

- VMware: Integrating Golden Paths into the Tanzu Platform, offering standardized workflows and ensuring secure, consistent Kubernetes deployments[3].

While these implementations have successfully streamlined operations, traditional Golden Paths remain static and reactive. As Kubernetes ecosystems scale and become increasingly complex, these paths must evolve to address challenges in real time. Manual workflows struggle to keep pace with dynamic infrastructure demands, security threats, and operational bottlenecks. To bridge this gap, organizations must transition from prescriptive workflows to adaptive, intelligent systems capable of autonomous decision-making and self-healing, ushering in the era of Agentic AI Workflows.

Kubernetes Operators vs. AI Agents

A Nest thermostat is an everyday example of an automated system that implements a control loop.

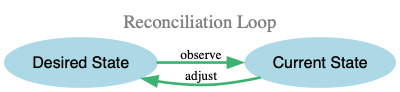

The control loop is a foundational concept at the core of automation systems. In the control loop system model, systems continuously monitor their state and adjust to maintain a desired outcome. This model is prevalent across various engineering fields (robotics, aviation, automotive, climate control), enabling machines and systems to self-correct and adapt to changing conditions.

A control loop typically consists of:

- Observation: Monitoring the current state of the system.

- Comparison: Evaluating the current state against the desired state.

- Action: Executing steps to align the system with the desired state.

- Repeat: Continuously repeating this process to maintain equilibrium.
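The four steps above can be sketched with a toy thermostat simulation. This is a minimal illustration, not how any real thermostat is implemented: the `Thermostat` class, the `run_loop` helper, the dead band, and the one-degree-per-step heating model are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """Toy thermostat; every name and value here is hypothetical."""
    target_c: float            # desired state
    tolerance_c: float = 0.5   # dead band to avoid constant switching

    def decide(self, current_c: float) -> str:
        # Comparison: evaluate the observed state against the desired state.
        if current_c < self.target_c - self.tolerance_c:
            return "heat"
        if current_c > self.target_c + self.tolerance_c:
            return "cool"
        return "idle"


def run_loop(thermostat: Thermostat, start_c: float, steps: int) -> list[float]:
    """Simulate the observe/compare/act cycle for a fixed number of steps."""
    temperature = start_c
    history = [temperature]
    for _ in range(steps):                       # Repeat
        action = thermostat.decide(temperature)  # Observation and Comparison
        if action == "heat":                     # Action
            temperature += 1.0
        elif action == "cool":
            temperature -= 1.0
        history.append(temperature)
    return history
```

Starting below the target, the simulated temperature climbs until it enters the dead band and then stays there, which is the equilibrium the loop is meant to maintain.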

In Kubernetes, the control loop is implemented as a reconciliation loop. Controllers and Operators use this loop to ensure that the cluster’s actual state matches the desired state defined by resource manifests.

Reconciliation Loop Process:

- Desired State: Defined through manifests (YAML/JSON) using Kubernetes resources like Deployments, Services, and ConfigMaps.

- Current State: This represents the actual state of the cluster that’s running now.

- Observation: Kubernetes controllers continuously observe the current state.

- Reconciliation: If the current state deviates from the desired state, the controller acts to reconcile them.
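As a rough sketch of the reconciliation step, the function below compares a desired state with a current state and returns the actions needed to close the gap. It is plain Python with no Kubernetes client involved; the `reconcile` name and the representation of state as a mapping from workload name to replica count are assumptions chosen for illustration.

```python
def reconcile(desired: dict[str, int], actual: dict[str, int]) -> list[str]:
    """Return the actions needed to make `actual` match `desired`.

    Both arguments map a workload name to a replica count. This is an
    illustrative stand-in for a controller, not the Kubernetes API.
    """
    actions = []
    for name, want in sorted(desired.items()):
        have = actual.get(name, 0)
        if have < want:
            actions.append(f"scale up {name}: {have} -> {want}")
        elif have > want:
            actions.append(f"scale down {name}: {have} -> {want}")
    # Anything running that is no longer declared gets removed.
    for name in sorted(set(actual) - set(desired)):
        actions.append(f"delete {name}")
    return actions
```

When the two states already match, the function returns an empty list, which mirrors the idea that a controller does nothing while the cluster is in its desired state.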

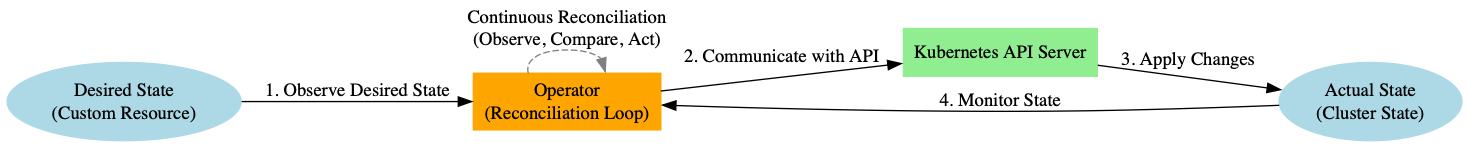

Operators extend Kubernetes controllers to manage the lifecycle of complex applications (Day-0/1/2 Operations) by automating Day-1 (installation, configuration) and Day-2 (scaling, upgrades, failover) tasks.[6] They encapsulate operational knowledge into Custom Resources (CRs) and continuously reconcile the desired state through the reconciliation loop.

Operators in Action:

- Desired State: Represents the user-defined state of the system, usually through Kubernetes Custom Resources.

- Operator: Continuously observes the desired state and ensures that the actual state matches it by interacting with the Kubernetes API.

- Kubernetes API Server: Applies changes to the cluster based on the operator’s instructions.

- Actual State: Reflects the real-time state of the cluster.

However, Operators are inherently reactive. They only respond when discrepancies arise and operate within predefined, static logic. This limitation hinders their ability to adapt to real-time environmental changes or evolving operational demands.

Limitations of Operators:

- Reactive by Design: Operators act only after state drift is detected.

- Static Logic: Hardcoded workflows limit flexibility in dynamic environments.

- Manual Updates: Updating reconciliation logic requires redeployments.

AI Agents: Beyond Operators

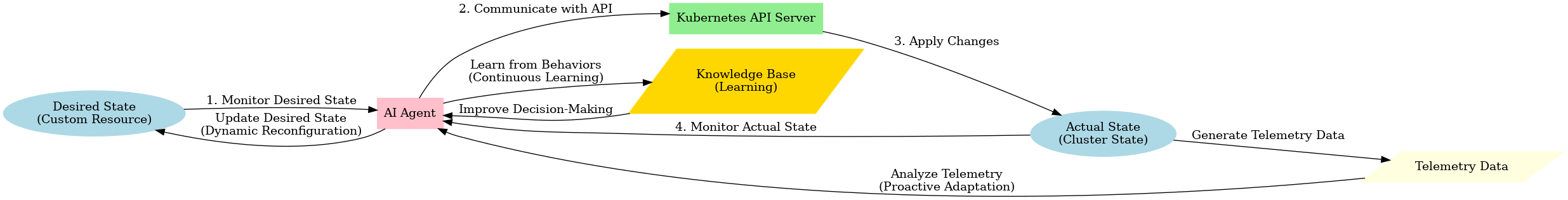

Agentic AI externalizes the reconciliation loop and shifts it to the AI agents, enabling them to manage Kubernetes environments proactively. These agents use real-time telemetry and predictive analytics to detect issues and make dynamic, context-aware decisions.

Advantages of AI Agents:

- Proactive Adaptation: AI agents predict and mitigate issues before they occur.

- Dynamic Reconfiguration: Real-time decision-making adjusts configurations instantly.

- Continuous Learning: AI agents evolve by learning from historical and live data.

This externalized approach transcends the reactive nature of Operators, allowing Kubernetes to become a self-healing, self-optimizing platform with greater autonomy in decision-making:

- Operators act after detecting a state drift, addressing issues only after they arise.

- AI Agents proactively prevent state drifts by predicting issues and making preemptive adjustments, enabling a self-healing Kubernetes environment.

| Feature | Kubernetes Operators | AI Agents |
| --- | --- | --- |
| Control Mechanism | Reactive Reconciliation Loop | Proactive, Predictive Decision Engine |
| Adaptability | Static Logic, Manual Updates | Continuous Learning, Dynamic Adaptation |
| Decision-Making | Event-Driven | Data-Driven, Predictive |
| Response Time | Post-Event Reaction | Pre-Event Prevention |
| Update Process | Manual Redeployment | Autonomous Learning and Updating |

Table 1: A basic summary, acknowledging that AI Agents could themselves be deployed as Operators.

The Path Toward AI-Enhanced Golden Paths

Today, Golden Paths are evolving beyond static workflows into intelligent, AI-driven systems. These systems can:

- Detect and resolve issues in real time, reducing downtime.

- Optimize resource allocation by analyzing workload patterns.

- Continuously adapt based on feedback and changing infrastructure needs.

As DevOps transitioned from tribal knowledge to Golden Paths, the next leap is from static best practices to Agentic AI Workflows, where AI agents drive the reconciliation process, harnessing data from across the cluster to make proactive decisions.

Traditional Kubernetes Golden Paths

Traditional Kubernetes Golden Paths comprise several interconnected components that standardize and streamline cloud-native application development, deployment, and operation. These components embody DevOps best practices, fostering consistency, efficiency, and scalability across teams. Golden Paths simplify the complexity of managing cloud-native applications by combining standardized templates, self-service tools, automated workflows, and integrated security. Together, these components create a consistent, secure, and efficient path from development to production, empowering teams to innovate confidently.

Standardized Templates

Definition: Golden Paths rely on reusable templates to enforce consistency when deploying and managing Kubernetes resources.

Key Elements:

- Helm Charts: Pre-packaged Kubernetes applications with configurable parameters.

- Terraform/Other IaC Modules: Infrastructure-as-code (IaC) templates for provisioning cloud resources.

- YAML Manifests: Standardized Kubernetes resource definitions (e.g., Deployments, Services, Ingress).

Benefits:

- Reduces setup time for new services.

- Embeds security and compliance policies.

- Promotes consistency across development teams.

Example: A standardized Helm chart for deploying microservices might automatically configure resource requests/limits and include sidecars for logging and metrics collection, reducing misconfigurations and accelerating deployment cycles.
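To make the idea of a template with built-in defaults concrete, here is a small sketch that builds a Deployment manifest as a Python dictionary. It is not a Helm chart and does not use Helm; the `render_deployment` function, the `DEFAULT_RESOURCES` values, and the example image name are hypothetical, while the manifest fields themselves follow the standard `apps/v1` Deployment layout.

```python
import copy
from typing import Optional

# Hypothetical platform defaults; real values would come from the platform team.
DEFAULT_RESOURCES = {
    "requests": {"cpu": "100m", "memory": "128Mi"},
    "limits": {"cpu": "500m", "memory": "256Mi"},
}


def render_deployment(name: str, image: str, replicas: int = 2,
                      resources: Optional[dict] = None) -> dict:
    """Build a Deployment manifest as a dict, filling in golden-path defaults."""
    container = {
        "name": name,
        "image": image,
        # Copy so callers cannot mutate the shared defaults by accident.
        "resources": copy.deepcopy(resources or DEFAULT_RESOURCES),
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {"containers": [container]},
            },
        },
    }
```

A team that calls `render_deployment("checkout", "registry.example.com/checkout:1.0")` gets requests and limits without having to think about them, and a team with unusual needs can still pass its own `resources`.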

Tooling and Documentation

Definition: Developer-friendly tools and comprehensive documentation are integral to guiding teams through established workflows.

Key Elements:

- IDPs: Platforms like Backstage aggregate tools, documentation, and templates into a self-service hub.

- Service Catalogs: Centralized lists of approved services and reusable components.

- Step-by-Step Tutorials: Interactive guides for everyday tasks, from setting up CI/CD pipelines to deploying Kubernetes workloads.

Benefits:

- Reduces onboarding time.

- Encourages adoption of best practices.

- Minimizes reliance on tribal knowledge.

Example: A Backstage plugin provides a “Create New Service” button that auto-generates scaffolding code, Kubernetes manifests, and CI/CD pipelines.

Integrated Workflows

Definition: Integrated CI/CD pipelines and monitoring tools automate the path from development to production.

Key Elements:

- CI/CD Pipelines: Automated workflows for building, testing, and deploying applications (e.g., Jenkins, ArgoCD).

- Policy Guardrails: Tools like OPA Gatekeeper and Kyverno enforce security and compliance during deployments.

- Observability: Integrated logging, monitoring, and tracing tools (e.g., Prometheus, Grafana, Loki) for proactive issue detection.

Benefits:

- Reduces deployment risks through automation and policy enforcement.

- Enhances system reliability with built-in observability.

- Accelerates feedback loops between development and operations.

Example: A deployment pipeline automatically scans Kubernetes manifests for security misconfigurations using Kubescape before approving deployments.
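The gating step in such a pipeline can be pictured with a few hand-written checks. The sketch below is a simplified stand-in and does not reproduce Kubescape or its rule set; `find_misconfigurations`, `gate`, and the three checks are assumptions picked because they are common review items.

```python
def find_misconfigurations(manifest: dict) -> list[str]:
    """Return human-readable findings for a Deployment-style manifest dict."""
    findings = []
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        security = container.get("securityContext", {})
        if security.get("privileged", False):
            findings.append(f"{name}: runs as privileged")
        if "limits" not in container.get("resources", {}):
            findings.append(f"{name}: no resource limits set")
        image = container.get("image", "")
        # Only look for a tag after the last "/" so registry ports are ignored.
        if ":" not in image.rsplit("/", 1)[-1] or image.endswith(":latest"):
            findings.append(f"{name}: image tag is missing or 'latest'")
    return findings


def gate(manifests: list[dict]) -> bool:
    """Approve the deployment only if no manifest has findings."""
    return all(not find_misconfigurations(m) for m in manifests)
```

In a pipeline, a `False` result from `gate` would fail the stage and surface the findings to the developer before anything reaches the cluster.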

Security and Compliance Automation

Definition: Built-in security and compliance controls are embedded in every deployment lifecycle stage.

Key Elements:

- Role-Based Access Control (RBAC): Enforced permissions to secure Kubernetes resources.

- Image Scanning: Tools like Trivy or Aqua Security scan container images for vulnerabilities.

- Secrets Management: Integration with tools like HashiCorp Vault for securely managing sensitive data.

Benefits:

- Minimizes security risks.

- Ensures compliance with industry standards (e.g., SOC 2, HIPAA).

- Automates incident detection and response.

Example: All Helm charts include pre-configured sidecars for secure secret injection via Vault, eliminating hard-coded credentials.

Environment Management

Definition: On-demand, fully provisioned environments for development, testing, and production.

Key Elements:

- Namespace Isolation: Automated creation of Kubernetes namespaces for isolated environments.

- Dynamic Resource Provisioning: Infrastructure scaling based on workload demands.

- Cost Optimization: Integration with FinOps tools like OpenCost for resource monitoring and cost control.

Benefits:

- Developers can provision environments without operational bottlenecks.

- Reduces idle resource costs through automated environment decommissioning.

- Accelerates testing and feature delivery.

Example: Developers use a self-service portal to spin up isolated preview environments for feature branches, which are automatically destroyed after testing.
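One simple way to picture automatic decommissioning is a time-to-live check over preview namespaces. The sketch below assumes a hypothetical inventory format (dictionaries with `name`, `labels`, and `created_at` keys), an `env=preview` label, and a 24-hour default; a real platform might instead tie cleanup to the pull request being merged or closed.

```python
from datetime import datetime, timedelta, timezone


def expired_previews(namespaces: list[dict], now: datetime,
                     ttl: timedelta = timedelta(hours=24)) -> list[str]:
    """Return names of preview namespaces older than `ttl`.

    Each namespace is a dict with hypothetical keys: "name", "labels",
    and "created_at" (a timezone-aware datetime).
    """
    expired = []
    for ns in namespaces:
        is_preview = ns.get("labels", {}).get("env") == "preview"
        if is_preview and now - ns["created_at"] > ttl:
            expired.append(ns["name"])
    return expired


if __name__ == "__main__":
    inventory = [
        {"name": "pr-101", "labels": {"env": "preview"},
         "created_at": datetime(2024, 1, 1, tzinfo=timezone.utc)},
        {"name": "prod", "labels": {"env": "production"},
         "created_at": datetime(2023, 1, 1, tzinfo=timezone.utc)},
    ]
    print(expired_previews(inventory, now=datetime(2024, 1, 3, tzinfo=timezone.utc)))
```

Filtering on the label first means long-lived namespaces such as production are never candidates for deletion, regardless of age.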

The Leap to Agentic AI Workflows

As Kubernetes ecosystems expand in scale and complexity, the need for intelligent, self-sustaining systems becomes paramount. Traditional Golden Paths, while effective, remain static and dependent on human intervention. The next evolution is the rise of Agentic AI Workflows: dynamic, autonomous systems capable of proactive decision-making, self-healing, and continuous optimization.

Unlike traditional automation, which executes predefined scripts, Agentic AI leverages real-time data, machine learning models, and feedback loops to make context-aware decisions. These intelligent workflows are not bound by static configurations; they continuously adapt to workload changes, security threats, and operational inefficiencies. This proactive adaptability sets Agentic AI apart, enabling Kubernetes environments to become self-healing, self-optimizing, and resilient at scale.

Traditional Workflows:

- Rely on predefined scripts and manual oversight.

- React to issues after they occur.

- Require significant human involvement to scale and adapt.

Agentic AI Workflows:

- Proactively detect and mitigate issues before they impact performance.

- Autonomously scale resources based on predictive analysis.

- Continuously adapt to changing workloads without manual input.

Example: An Agentic AI system predicts a surge in user traffic and scales Kubernetes pods in advance, preventing latency and service degradation.
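A very reduced version of "predict, then scale ahead of demand" is sketched here. It uses a least-squares straight line as the forecast, which is a deliberately simple substitute for whatever model a real agent would use; `forecast_next`, `replicas_for`, the per-pod capacity, and the replica bounds are all assumptions for illustration.

```python
import math


def forecast_next(samples: list[float]) -> float:
    """Extrapolate one step ahead with a least-squares straight line."""
    n = len(samples)
    if n < 2:
        return samples[-1] if samples else 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    slope_num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples))
    slope_den = sum((x - mean_x) ** 2 for x in range(n))
    slope = slope_num / slope_den
    intercept = mean_y - slope * mean_x
    # Load cannot be negative, so clamp the extrapolated value at zero.
    return max(0.0, intercept + slope * n)


def replicas_for(requests_per_s: float, per_pod_capacity: float,
                 min_replicas: int = 2, max_replicas: int = 50) -> int:
    """Translate a forecast load into a bounded replica count."""
    needed = math.ceil(requests_per_s / per_pod_capacity)
    return max(min_replicas, min(max_replicas, needed))
```

The key design point is that the replica count is derived from the forecast rather than from the current measurement, so capacity is added before the load arrives. The upper and lower bounds keep a bad forecast from scaling the workload to zero or without limit.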

Key Agentic AI Capabilities in Kubernetes

- Anomaly Detection and Self-Healing

  - AI models analyze real-time telemetry (logs, metrics, traces) to detect abnormal behaviour.

  - Autonomous remediation actions (e.g., restarting failed pods, adjusting network policies).

- Predictive Autoscaling

  - ML models predict workload spikes and scale deployments ahead of demand.

  - Combines resource optimization with cost efficiency.

- Intelligent Cost Optimization (FinOps)

  - Identifies underutilized resources and optimizes infrastructure spending.

  - Integrates with tools like OpenCost for real-time financial insights.

- Security Threat Detection

  - Continuously scans for vulnerabilities and compliance issues.

  - Triggers policy updates or network segmentation in response to threats.
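The anomaly detection capability can be illustrated with the simplest statistical test available, a z-score against recent history. This is a sketch under stated assumptions: production systems would likely use more robust models, and the `is_anomalous` and `remediation_for` names, the threshold of three standard deviations, and the metric-to-action mapping are all hypothetical.

```python
from statistics import mean, pstdev

# Hypothetical mapping from a metric name to a first remediation step.
REMEDIATIONS = {
    "restart_count": "restart pod",
    "error_rate": "roll back release",
}


def is_anomalous(history: list[float], latest: float,
                 threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` std devs from the mean."""
    if len(history) < 2:
        return False  # not enough data to judge
    sigma = pstdev(history)
    if sigma == 0:
        # A perfectly flat history: any change at all is unusual.
        return latest != history[0]
    return abs(latest - mean(history)) / sigma > threshold


def remediation_for(metric: str) -> str:
    """Pick an action for an anomalous metric, escalating when unsure."""
    return REMEDIATIONS.get(metric, "page on-call engineer")
```

Falling back to a human for unknown metrics keeps the agent from improvising on signals it has no vetted response for.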

Example Use Case: Agentic AI for Resource Optimization

Scenario: A Kubernetes cluster supporting an e-commerce platform faces unpredictable traffic during sales events.

Traditional Response:

- Set conservative resource limits.

- Scale manually or rely on fixed autoscaling policies.

- Rely on horizontal pod autoscaling triggered by CPU/memory usage.

- Accept the risk of latency spikes or service outages when demand outpaces scaling.

Agentic AI Response:

- Predicts traffic surges based on historical data and marketing schedules.

- Autonomously scales deployments before demand peaks.

- Monitors costs and right-sizes resources post-event.

Outcome:

- Zero downtime during high-traffic events.

- Optimized resource usage, reducing operational costs.

- Faster recovery from anomalies without human intervention.

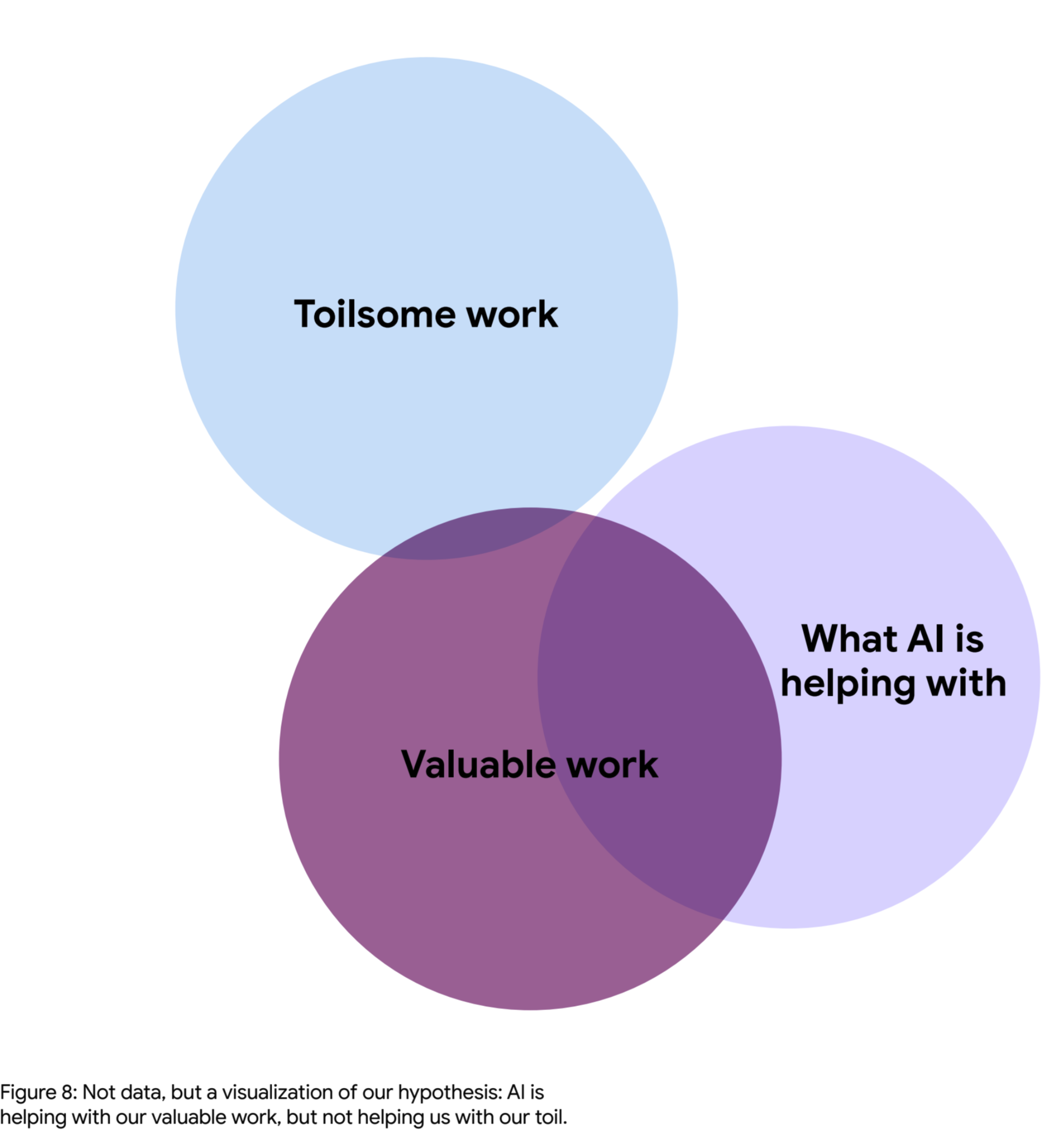

Benefits of AI-Driven Golden Path

“While AI is making the tasks people consider valuable easier and faster, it isn’t really helping with the tasks people don’t enjoy. That this is happening while toil and burnout remain unchanged, obstinate in the face of AI adoption, highlights that AI hasn’t cracked the code of helping us avoid the drudgery of meetings, bureaucracy, and many other toilsome tasks.” [5]

Agentic AI Golden Paths for Kubernetes aim to reduce toilsome work and optimize valuable work. Below, we explore the key benefits of adopting these advanced workflows.

Consistency and Reliability:

- Predictable Deployments: AI-enforced best practices reduce misconfigurations.

- Self-Healing Systems: Automatic anomaly detection and rollback minimize downtime.

- Compliance Automation: Proactive security scanning and policy enforcement.

Scalability:

- Dynamic Resource Management: Predictive scaling ensures performance without over-provisioning.

- Optimized Performance: The system continuously refines resource usage.

- Global Deployment Readiness: Adapt seamlessly across multi-cloud, hybrid, or edge environments.

Developer Empowerment:

- Self-Service Capabilities: Provisioning and deploying without ops bottlenecks.

- Reduced Toil: AI automates routine or repetitive tasks, letting teams focus on innovation.

- Faster Time-to-Market: Constant intelligence reduces risk and shortens feedback loops.

Designing AI-Enhanced Golden Paths

The journey to AI-driven infrastructure requires planning, clear goals, and iterative development.

Defining Clear Goals:

- Identify Automation Opportunities: Focus on repetitive, high-impact tasks for automation.

- Align with Business Objectives: Ensure AI workflows support operational and strategic goals.

Integrating AI Agents:

- Modular Integration: Use pluggable AI models for flexibility.

- Observability-Driven Learning: Feed monitoring data into AI models for continuous improvement.

- Policy Integration: Use OPA Gatekeeper or Kyverno as guardrails to ensure AI agents act within compliance boundaries.
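The guardrail idea can be sketched as a small decision function that every agent proposal must pass through before it is executed. This is plain Python rather than OPA Gatekeeper or Kyverno policy syntax, and the action kinds, the protected namespace list, and the `ProposedAction` and `evaluate` names are assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical policy: which actions an agent may take on its own.
AUTO_APPROVED = {"scale", "restart"}
NEEDS_HUMAN = {"delete", "rollback", "patch_network_policy"}
PROTECTED_NAMESPACES = {"kube-system"}


@dataclass(frozen=True)
class ProposedAction:
    kind: str
    namespace: str
    target: str


def evaluate(action: ProposedAction) -> str:
    """Return "allow", "needs_approval", or "deny" for an agent's proposal."""
    if action.namespace in PROTECTED_NAMESPACES:
        return "deny"
    if action.kind in AUTO_APPROVED:
        return "allow"
    if action.kind in NEEDS_HUMAN:
        return "needs_approval"
    return "deny"  # default-deny anything the policy does not name
```

The default-deny branch matters most: an agent that learns a new kind of action cannot use it until someone has decided which bucket it belongs in.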

Iterating with Feedback:

- Feedback Loops: Continuously refine AI models with operational data.

- A/B Testing: Safely test AI decisions before full rollout.

- User Feedback: Involve developers and operators in evaluating workflow effectiveness.

The Critical Role of DORA Metrics in Measuring DevOps Success

“DORA’s four keys have been used to measure the throughput and stability of software changes. This includes changes of any kind, including changes to configuration and changes to code.” [5]

Quantifying success has always been a complex challenge in software delivery and operations. Teams often rely on anecdotal evidence or intuition to assess performance without clear, objective measures, leading to fragmented improvements and inconsistent results. This is where the DevOps Research and Assessment (DORA) metrics emerge as a cornerstone for modern engineering teams. Developed through rigorous research and analysis of high-performing technology organizations, DORA metrics provide a standardized framework for measuring software delivery pipelines’ efficiency, reliability, and stability.

The DORA metrics consist of four key indicators, each designed to capture a critical aspect of software delivery performance:

- Deployment Frequency (DF) measures how often a team successfully deploys code to production. A high DF signifies a streamlined, efficient development process in which new features, bug fixes, and updates are released rapidly and reliably.

- Lead Time for Changes (LT) measures the time for a code change to move from commit to deployment in production. Shorter lead times reflect an optimized pipeline in which ideas can quickly be transformed into customer value.

- Change Failure Rate (CFR) tracks the percentage of deployments that lead to failures in production. Lower change failure rates indicate robust testing, effective deployment strategies, and resilient systems.

- Mean Time to Recovery (MTTR) measures the average time it takes to recover from a production failure. A lower MTTR suggests that teams have effective incident response processes and systems for resilience and rapid recovery.
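As a worked illustration, the four indicators can be computed from a list of deployment records. The `Deployment` record, its field names, and the `dora_metrics` function are hypothetical, and the sketch takes simple averages for lead time and recovery time, which is one of several reasonable choices (medians are also common).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    recovered_at: Optional[datetime] = None  # set when a failure is resolved


def _mean_hours(deltas: list[timedelta]) -> float:
    total = sum(deltas, timedelta())
    return total.total_seconds() / 3600 / len(deltas)


def dora_metrics(deployments: list[Deployment], period_days: int) -> dict:
    """Compute the four DORA indicators over a reporting period."""
    if not deployments:
        raise ValueError("need at least one deployment")
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    recoveries = [d.recovered_at - d.deployed_at
                  for d in failures if d.recovered_at is not None]
    return {
        "deployment_frequency_per_day": len(deployments) / period_days,
        "lead_time_hours": _mean_hours(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        "mttr_hours": _mean_hours(recoveries) if recoveries else None,
    }
```

Feeding the same function with records from before and after a workflow change gives a like-for-like comparison of the four numbers.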

Why DORA Metrics Matter in AI-Driven Workflows

Incorporating Agentic AI into Kubernetes operations is not merely about automation. It’s about creating intelligent systems that continuously adapt and improve. However, without precise metrics to measure the impact of these AI-driven workflows, progress cannot be quantified, and further investments in automation cannot be justified. This is where DORA metrics provide immense value.

By monitoring these metrics, organizations gain a data-driven understanding of how AI agents influence software delivery performance. For example:

- Deployment Frequency: AI agents can automate deployment pipelines, identify bottlenecks, and optimize CI/CD workflows, resulting in more frequent and reliable deployments.

- Lead Time for Changes: Predictive analytics and intelligent automation reduce manual interventions, speeding up the path from code commit to production release.

- Change Failure Rate: AI agents can proactively detect potential failures through anomaly detection, enabling preventive measures and reducing the likelihood of failed deployments.

- Mean Time to Recovery: In the event of a failure, AI agents can initiate automated recovery actions, such as rolling back faulty releases or scaling affected services, drastically reducing recovery time.

Aligning AI-Enhanced Workflows with Business Outcomes

The value of DORA metrics extends beyond technical performance and is directly linked to business outcomes. High deployment frequency and low lead time accelerate time-to-market, giving organizations a competitive edge. A reduced change failure rate enhances product quality and customer trust, while a low MTTR minimizes downtime costs and preserves brand reputation.

Organizations create a continuous improvement cycle by embedding DORA metrics into the feedback loops of AI-enhanced Golden Paths. AI agents act on real-time data and measure their impact, refining workflows to maximize efficiency and resilience. This alignment ensures that every improvement is purposeful, measurable, and aligned with strategic goals.

DORA metrics are the vital compass guiding organizations through the evolving landscape of AI-driven DevOps. They transform abstract performance concepts into actionable insights, empowering teams to make informed decisions, demonstrate value, and drive sustained excellence in Kubernetes operations.

Challenges and Future Directions

While AI-enhanced Golden Paths offer significant advantages, they also introduce new challenges that require careful management.

Balancing Automation and Control:

- Risk of Over-Automation: Excessive automation may lead to unintended consequences.

- Mitigation: Implement human-in-the-loop models and approval workflows for high-impact actions.

Evolving with Kubernetes:

- Rapid Ecosystem Changes: Kubernetes evolves quickly, and AI models must adapt to new APIs and tools.

- Mitigation: Adopt flexible, modular architectures that allow seamless updates.

Long-Term Vision:

- Ethical AI in Operations: Ensure transparency, fairness, and accountability in AI-driven decisions.

- Adaptive Learning Systems: Develop AI models that self-improve without introducing drift or bias.

- Cross-Platform Autonomy: Extend AI workflows across cloud providers and edge computing environments.

Conclusion

Integrating Agentic AI into Kubernetes Golden Paths marks a pivotal shift from static best practices to dynamic, autonomous workflows. Operators laid the groundwork for reactive reconciliation, but the next evolution externalizes that loop into AI agents capable of real-time decision-making. Measured through DORA metrics, these advanced paths foster significant improvements in efficiency, reliability, and speed. Organizations can achieve unprecedented efficiency, resilience, and scalability by embedding intelligence into Kubernetes workflows.

Like Leto II’s vision, pursuing an AI-driven Golden Path is necessary in today’s cloud-native landscape. Teams can focus on meaningful work while systems heal, optimize, and secure themselves autonomously. This is the dawn of Agentic AI in Kubernetes, a future defined by proactive, living workflows that adapt to your organization’s ever-changing needs.

The Golden Path has evolved. Will your team evolve with it?

Appendix

Definitions of Different Paths

It often feels like in software, we have a path derangement syndrome or PDS.

Golden Path: An opinionated, well-supported, task-specific workflow promoting best practices in software development and operations. Golden Paths guide teams to efficient, consistent, and scalable solutions while allowing flexibility for innovation.

DevOps: A CI/CD pipeline that automates code deployment with integrated security checks and performance monitoring.

Garden Path: A seemingly straightforward route that can mislead, trick, or seduce users or developers into making incorrect assumptions, often resulting in confusion or errors. In UX design, a garden path can refer to misleading navigation or feedback loops.

DevOps: Overly simplified deployment scripts that hide critical configuration details, leading to production errors.

Happy Path: The default, ideal scenario where everything functions as expected without encountering errors. It focuses on ensuring that the primary workflow succeeds smoothly and efficiently.

DevOps: A seamless deployment of a microservice with all dependencies resolved and services running correctly.

Critical Path: The sequence of dependent tasks in a project that determines the minimum time needed to complete it. A delay in any task on the critical path delays the entire project. The term also refers to the parts of a function that are essential to its execution.

DevOps: Kubernetes cluster upgrades where skipping node patches could delay feature rollouts.

Silver Path: A flexible alternative to the Golden Path that balances standardization with customization. It allows for deviations where necessary while still adhering to best practices.

DevOps: Customizing Helm charts while following company-approved deployment standards.

Paved Road: Similar to the Golden Path, but typically emphasizes infrastructure support. It provides pre-built, scalable solutions that reduce complexity and streamline development workflows.

DevOps: A fully managed Kubernetes environment with observability tools pre-integrated.

Desire Path: An organically formed path created by repeated user behaviour, reflecting preferred or more efficient workflows. User analytics often identify desire paths, which may inform future design improvements.

DevOps: Developers consistently use a specific CLI tool, prompting official support and documentation.

Unhappy Path: A scenario in which errors occur or unexpected inputs are introduced, leading to alternate flows or failure states. Testing these paths ensures resilience and robustness.

DevOps: Testing infrastructure failure scenarios with chaos engineering tools.

Dark Path: A path intentionally hidden or obscure, often used in security to limit exposure or access to sensitive systems. It can also refer to deceptive user experiences that lead users to unintended outcomes.

DevOps: Internal scripts for emergency shutdowns known only to senior engineers.

Shiny Path: An attractive but ultimately inefficient or suboptimal workflow. It entices users with appealing features but lacks depth or scalability.

DevOps: Adopting new cloud services without understanding long-term costs or maintenance.

Rocky Path: A challenging, error-prone workflow requiring significant effort and expertise. It is often the result of poor tooling or a lack of standardization.

DevOps: Manually managing server configurations without automation.

Forked Path: A decision point where multiple viable workflows diverge, offering different advantages and trade-offs. It requires thoughtful consideration to choose the most suitable direction.

DevOps: Choosing between Kubernetes Operators or Helm for deployment management.
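
Of the paths defined above, the Critical Path is the one that can be computed directly. The following is a minimal sketch, assuming a small task graph with known durations; the task names and durations are hypothetical and chosen only to echo the cluster-upgrade example. It finds the longest chain of dependent tasks, which sets the minimum time for the whole project.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def critical_path(durations, deps):
    """Return (total_duration, path) for the longest dependency chain.

    durations: maps each task to its duration
    deps: maps a task to the set of tasks that must finish before it starts
    """
    graph = {task: deps.get(task, set()) for task in durations}
    finish = {}  # earliest possible finish time of each task
    prev = {}    # latest-finishing prerequisite of each task, or None

    # static_order() yields every task after all of its prerequisites.
    for task in TopologicalSorter(graph).static_order():
        latest = max(graph[task], key=lambda d: finish[d], default=None)
        start = finish[latest] if latest is not None else 0
        finish[task] = start + durations[task]
        prev[task] = latest

    # Walk back from the task that finishes last to recover the chain.
    node = max(finish, key=finish.get)
    total = finish[node]
    path = []
    while node is not None:
        path.append(node)
        node = prev[node]
    return total, path[::-1]


# Hypothetical upgrade plan; durations are in arbitrary units.
durations = {
    "patch_nodes": 3,
    "upgrade_control_plane": 2,
    "update_helm_charts": 1,
    "roll_out_feature": 1,
}
deps = {
    "upgrade_control_plane": {"patch_nodes"},
    "roll_out_feature": {"upgrade_control_plane", "update_helm_charts"},
}

total, path = critical_path(durations, deps)
print(total, " -> ".join(path))
```

In this made-up plan, updating the Helm charts has slack, while any delay in patching nodes pushes back the feature rollout, which is what makes node patching part of the critical path.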

Idealized DevOps Process Evolution

References

[1] Spotify: How We Use Golden Paths to Solve Fragmentation in Our Software Ecosystem.

Spotify explains how Golden Paths are used to address fragmentation in their engineering ecosystem. Their platform, Backstage, creates standardized workflows that guide teams and promote best practices.

[2] Google Cloud: Light the Way Ahead: Platform Engineering, Golden Paths, and the Power of Self-Service.

This article highlights how Golden Paths are implemented in Google Cloud’s platform engineering to simplify infrastructure, accelerate development, and ensure consistency across teams.

[3] VMware: What Is a Golden Path?

VMware describes how Golden Paths are integrated into the Tanzu Platform to provide standardized workflows for secure and efficient Kubernetes deployments.

[4] Internal Developer Platform: The Role of Internal Developer Platforms in Modern Software Development.

Explains how Internal Developer Platforms (IDPs) leverage Golden Paths to streamline infrastructure management, reduce complexity, and support developer self-service workflows.

[5] DORA Report: Get the DORA Accelerate State of DevOps Report.

This report outlines the key metrics used to measure DevOps performance, such as Deployment Frequency and Change Failure Rate, and connects them to practices like Golden Paths.

[6] Kubernetes Operators: Kubernetes Operators Explained.

Provides an overview of Kubernetes Operators and their role in automating reconciliation workflows and managing the lifecycle of complex applications.