Rethinking Agency: Exploring Agency Reversal in Art and Agentic AI

Introduction

What happens when machines start making decisions for us? The shifting balance of control between humans and AI systems challenges our understanding of autonomy and agency.

Agency is a multidimensional construct that reflects an entity’s capacity to act independently, make decisions, and influence its environment. This capacity exists on a spectrum, from minimal through substantial to complete. An entity’s position on this spectrum often shifts gradually, as when a child grows into adulthood and gains more agency. When agency shifts between entities within a system, a new phenomenon emerges: agency reversal.

Agency reversal describes the transformative process in which entities within a system exchange agency. The entity gaining agency moves gradually from minimal influence to behaviours that resemble intentional, goal-directed action. For example, the progression from realistic paintings to Impressionist works reflects a transition in which the viewer’s interpretive role becomes increasingly active, eventually leading to agency reversal. The viewer no longer passively consumes art but engages actively with the artwork, interpreting colours and forms to extract meaning. Similarly, software systems are shifting from humans performing all tasks to AI agents that take on greater autonomy and influence. Eventually, agency is handed off entirely to AI agents, resulting in agency reversal.

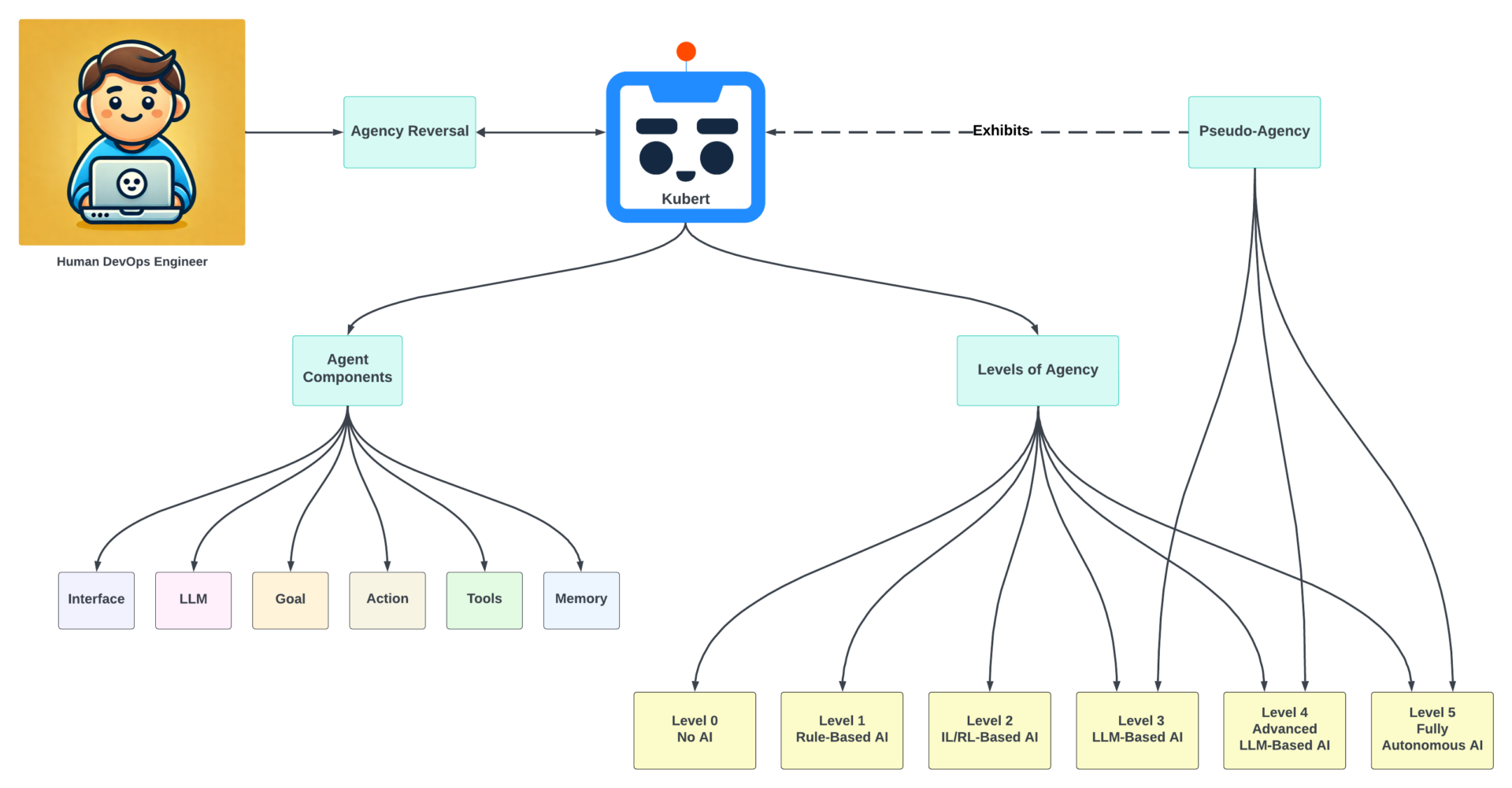

Though Agentic AI systems lack true psychological intentionality or consciousness, their observable patterns of interaction naturally lead humans, who are sensitive to agency, to perceive them as agent-like. However, it is critical to recognize that this perception stems from simulated behaviours rather than genuine autonomy. We have termed this concept pseudo-agency.

Pseudo-agency describes AI systems that appear autonomous through simulated decision-making but lack genuine intentionality and consciousness. This illusion can lead users to overestimate the systems’ capabilities. For instance, Kubert DevOps AI Agents execute tasks and make seemingly autonomous decisions, reinforcing the perception of intentionality. These systems operate without true consciousness yet perform goal-oriented actions, creating the illusion of autonomy. Kubert operates within Kubernetes environments, automating the scaling, deployment, and management of resources, and so marks a gradual transition from passive tools to systems with pseudo-agency.

Agency in Art: From Passive Spectatorship to Active Interpretation

Historically, art was perceived as a medium of fixed meaning, a view rooted in traditions such as French academic painting, also known as academicism.

Alexandre Cabanel – The Birth of Venus

In this model, viewers played a passive role, receiving the artist’s message as a clear, rule-bound transmission akin to the precision of cabinet-making. However, movements like Impressionism disrupted this paradigm. By prioritizing light, emotion, and fleeting moments, Impressionists invited viewers to interpret incomplete scenes and resonate emotionally with the work. For instance, Claude Monet’s Impression, Sunrise (1872) foregrounds transient light and colour, demanding active engagement to fill in its nebulous contours.

Claude Monet – Impression, Sunrise

This shift reached an apex with Cubism, where artists like Pablo Picasso deconstructed perspective, compelling viewers to assemble fragmented forms into cohesive wholes. This progression marks a move along the agency spectrum, as viewers transitioned from passive receivers of fixed meanings to active interpreters. Picasso’s Les Demoiselles d’Avignon (1907) exemplifies this agency reversal, as its angular forms and abstracted figures challenge viewers to interpret its fractured reality.

Pablo Picasso – Les Demoiselles d’Avignon

This trend continued with Abstract Expressionism, where interpretation became even more subjective.

However, following the trend that far would stretch our analogy and make it difficult to relate to Agentic AI.

The exchange of agency from painter to viewer is gradual. By moving away from a realistic replication of reality, the painter emphasizes the viewer’s participation. The viewer has no choice but to accept this new level of agency in order to interpret and enjoy the new creation. The evolution of viewer agency in art offers a compelling parallel to the transformation of user roles in AI systems, a transformation most evident in the rise of Agentic AI in DevOps environments.

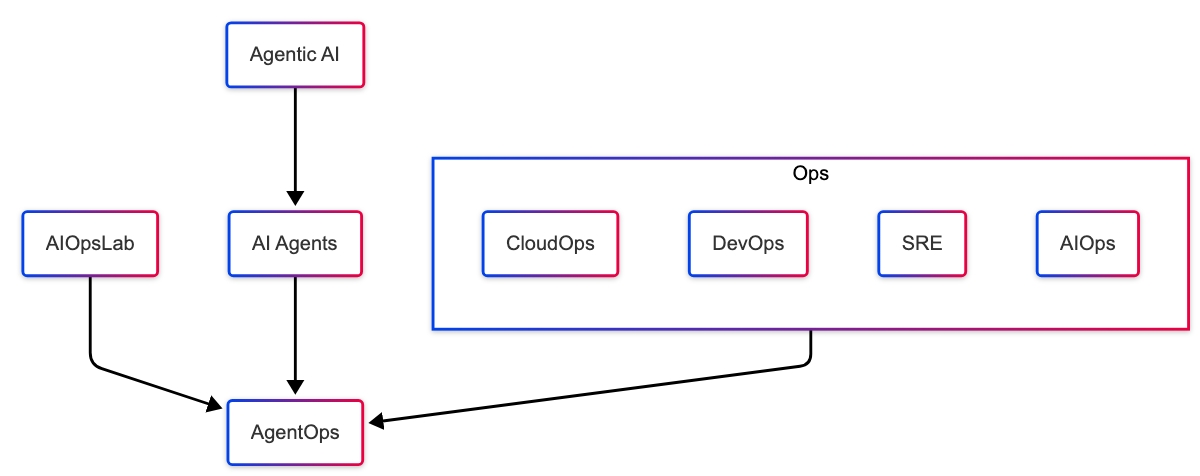

Agentic AI: From DevOps to AI Agents

With the adoption of Agentic AI, traditional DevOps automation tools are being replaced by autonomous, decision-making AI agents. This transition mirrors the agency reversal observed in art: systems initially designed to execute human commands are now evolving, with the aid of AI agents, to make autonomous decisions and adapt to dynamic environments.

Kubert DevOps AI Agents exemplify this shift. Initially, DevOps systems operated under strict user control, executing predefined scripts and workflows. However, Kubert introduces a form of pseudo-agency in which the AI agent autonomously scales resources, optimizes deployments, and diagnoses issues without constant human oversight.

This evolution aligns with the levels of AI agency:

- Level 0: Passive tools executing scripted actions. Ex. Predefined scripts.

- Level 1-2: Tools with conditional automation and adaptive learning. Ex. Automated scaling based on thresholds.

- Level 3-4: AI agents capable of proactive decision-making and goal-setting. Ex. Predictive scaling and anomaly detection.

- Level 5: Fully autonomous agents managing complex systems with minimal human input. Ex. Fully autonomous system optimization.
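
To make the lower levels more concrete, the following Python sketch contrasts how a scaling decision might look at Level 0, Level 1-2, and Level 3-4. It is an illustration only: the function names, the CPU thresholds, the target utilization, and the naive linear forecast are all assumptions made for this example, and none of it describes how Kubert or Kubernetes actually computes replica counts.

```python
import math
from statistics import mean


def scripted_replicas(configured: int) -> int:
    """Level 0 (hypothetical): return exactly what the operator scripted."""
    return configured


def threshold_replicas(current: int, cpu: float,
                       high: float = 0.8, low: float = 0.3) -> int:
    """Level 1-2 (hypothetical): react to the latest CPU reading only."""
    if cpu > high:
        return current + 1
    if cpu < low and current > 1:
        return current - 1
    return current


def predictive_replicas(current: int, cpu_history: list[float],
                        target: float = 0.6) -> int:
    """Level 3-4 (hypothetical): size the system for a forecast load."""
    if len(cpu_history) < 2:
        return current
    # Naive linear forecast: last reading plus the average step so far.
    steps = [b - a for a, b in zip(cpu_history, cpu_history[1:])]
    forecast = max(0.0, cpu_history[-1] + mean(steps))
    # Spread the forecast total load so each replica sits near the target.
    needed = math.ceil(forecast * current / target)
    return max(1, needed)


history = [0.4, 0.5, 0.6]  # assumed per-replica CPU utilization samples
reactive = threshold_replicas(3, history[-1])
proactive = predictive_replicas(3, history)
```

With three replicas and this history, the threshold rule leaves the count at three because 0.6 is still below the 0.8 limit, while the predictive rule forecasts roughly 0.7 and scales to four replicas before the limit is reached. The difference between reacting and anticipating is what separates conditional automation from proactive decision-making in the list above.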

As AI agents advance, systems transition from human-operated to AI-operated. As the agents’ level of agency rises, agency reverses: humans increasingly supervise operations rather than control them directly. This shift has profound implications for system design, accountability, and human oversight.
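
One way to picture the supervisory role is an approval gate, sketched below in Python. This is a minimal illustration under stated assumptions: `ProposedAction`, its `risk` score, the `supervise` function, and the 0.5 risk limit are hypothetical names and values invented for this example, not part of Kubert or any real tool.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    description: str
    risk: float  # hypothetical score in [0, 1] assigned by the agent


def supervise(action: ProposedAction,
              approve: Callable[[ProposedAction], bool],
              risk_limit: float = 0.5) -> str:
    """Let low-risk actions run; escalate the rest to a human supervisor."""
    if action.risk <= risk_limit:
        return "auto-executed"
    if approve(action):
        return "executed after approval"
    return "rejected by supervisor"


# The human is represented here by a callback that declines everything.
decline = lambda action: False
routine = supervise(ProposedAction("scale web tier to 4 replicas", 0.2), decline)
risky = supervise(ProposedAction("delete staging namespace", 0.9), decline)
```

In this sketch the human never issues the commands; they only decide on the actions the agent escalates, which is the reversal of roles the paragraph above describes.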

Conclusion

Exploring agency reversal across art and Agentic AI systems reveals a shared trajectory: a gradual but profound shift in who holds interpretive and operational power. Art’s movement from realism to abstraction empowered viewers to engage actively in meaning-making. Similarly, AI systems like Kubert DevOps AI Agents are transitioning from passive tools to autonomous entities that influence and optimize complex systems.

Recognizing this shift allows us to better understand the dynamics of emerging technologies and their integration into human workflows. While these systems do not possess genuine autonomy, their pseudo-agency challenges us to reconsider concepts of control, responsibility, and collaboration between humans and machines.

As AI systems continue to evolve, the question is no longer whether they will act autonomously but how we will define our role alongside them.
