Building a Custom AI Agent for SQL Server: DevOps Practices with Terraform, Kubernetes, and Google Cloud

Managing Kubernetes deployments and ensuring seamless interactions with external databases like SQL Server can be daunting in modern cloud environments. AI-driven automation through platforms like Kubert offers a streamlined approach, reducing manual effort and minimizing the risk of errors, thereby enhancing operational efficiency and reliability.

This blog will explore DevOps practices for deploying a custom AI Agent that interacts with a Microsoft SQL Server database hosted on Google Cloud.

This blog is the first in a series that explores how to build and deploy a custom AI Agent for SQL Server in Kubert. This post focuses on the DevOps practices necessary for setting up and maintaining the infrastructure using Terraform, Terragrunt, and Google Cloud Build. We’ll cover everything from infrastructure as code (IaC) to automating database creation and data import. The following blog will deeply dive into the AI Agent, explaining how it processes user prompts, generates SQL queries, and interacts with the database.

The code used in this blog is available in the blog-support GitHub repository, where you can find all the necessary scripts and configurations.

Overview of the Custom AI Agent

The custom AI Agent in Kubert is designed to process user prompts, convert them into SQL queries, and interact with an external SQL Server database. The agent operates within a Kubernetes cluster, leveraging a secure proxy to communicate with the Cloud SQL instance on Google Cloud.

Here’s how it works:

- User Prompt: The process begins when a user prompts the SQL Server AI Agent. This prompt could be a simple query like “List all orders shipped to the UK” or a more complex request involving multiple conditions.

- SQL Server AI Agent: The AI Agent processes the user prompt using its LLM, translating it into an SQL query. This query is executed on the SQL Server instance hosted in Google Cloud SQL.

- Secure Proxy: Before executing the query, it passes through a Secure Proxy, ensuring a secure connection between the AI Agent and the SQL Server database. This proxy plays a crucial role in protecting sensitive data and maintaining the integrity of the connection.

- Google Cloud SQL (SQL Server): The Secure Proxy forwards the SQL query to the SQL Server instance, where it is processed. The results are then sent back through the same secure channel.

- Results Delivery: The SQL Server AI Agent receives and presents the query results to the user through the Interface. This feedback loop allows the user to see the outcome of their query in real-time.

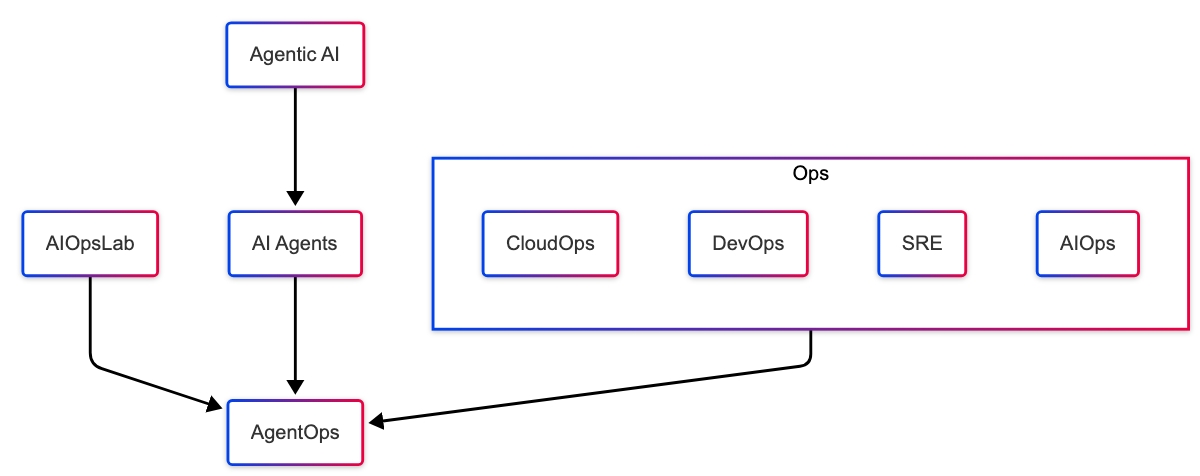

AI Agent DevOps

Deploying a custom AI Agent in a Kubernetes environment involves several essential DevOps practices, focusing strongly on GitOps and IaC. GitOps ensures that all configurations and infrastructure definitions are stored in version control, allowing for automated, consistent, and reproducible deployments. IaC allows us to define and manage infrastructure using code, making infrastructure provisioning more efficient, scalable, and reliable.

GitOps

GitOps plays a crucial role in our deployment process by enabling automated and consistent management of infrastructure and application configurations. With Git as the single source of truth, any changes to the infrastructure are tracked, reviewed, and applied automatically using CI/CD pipelines. For instance, we use Atlantis as our GitOps tool, which automates Terraform operations by responding to pull requests. This ensures that infrastructure changes are reviewed, approved, and applied consistently across all environments.

At Kubert, we define GitOps using this quick formula.

Terraform: This is the Kubert team tool for implementing IaC in GitOps. Terraform’s declarative approach allows teams to define infrastructure consistently and repeatedly.

Bitbucket Pull Request: Bitbucket is the platform for managing git repositories, where infrastructure changes are proposed, reviewed, and approved through Pull Requests. This adds a layer of collaboration and governance to the GitOps process.

Atlantis: Atlantis automates Terraform operations by responding to pull requests. When a PR is opened or updated, Atlantis can automatically plan and apply Terraform changes, streamlining the CI/CD aspect of GitOps.

IaC with Terraform + TerraGrunt

At the core of our deployment strategy is IaC using Terraform, enhanced by Terragrunt. This approach allows us to define all aspects of our infrastructure, from cloud resources to Kubernetes configurations, in a declarative manner, with added benefits like managing multiple environments and reusing configurations efficiently with Terragrunt. The Terraform and Terragrunt configuration files in the blog-support GitHub repository provide the blueprints for deploying and managing the resources on Google Cloud.

Terragrunt Project Structure

Before diving into the details of Terraform configurations, it’s essential to understand the project structure when using Terragrunt. Terragrunt is a tool that acts as a thin wrapper for Terraform, adding features like environment management, keeping your Terraform code DRY (Don’t Repeat Yourself), and handling dependencies between Terraform modules. Below is the project structure that utilizes Terragrunt to manage infrastructure across different environments:

. ├── environments │ ├── env.hcl │ ├── prod │ │ └── deploy │ │ └── terragrunt.hcl │ └── terragrunt.hcl └── infra └── deploy ├── README.md ├── app.tf ├── data.tf ├── main.tf ├── outputs.tf └── variable.tf

environments/ Directory

This directory contains all the Terragrunt-managed environment-specific configurations. It allows easy infrastructure management and deployment across different environments like development, staging, and production.

- env.hcl: This file contains environment-level variables and configurations shared across different environments. It is typically included in other terragrunt.hcl files to avoid repetition.

- prod/ Directory:

- deploy/:

- This directory contains the environment-specific terragrunt.hcl file for the production environment. The file references the Terraform modules defined in the infra/ directory and applies environment-specific variables.

- terragrunt.hcl: This file configures the production deployment. It includes environment-specific variables, references the Terraform code in the infra/deploy/ directory, and manages dependencies.

- deploy/:

- terragrunt.hcl (in the environments/ root): This file defines configurations or settings that apply globally across all environments.

infra/ Directory

This directory contains the Terraform configurations that define the infrastructure. The code here is reusable across multiple environments, thanks to Terragrunt.

- deploy/ Directory:

- main.tf: The main entry point for your Terraform configuration is where the core infrastructure components (like Cloud SQL instances) are defined.

- app.tf: Contains Terraform code that defines application-specific infrastructure, such as cloud storage buckets, service accounts, and Kubernetes secrets.

- data.tf: Defines data sources used by the Terraform configuration to pull in existing infrastructure details.

- outputs.tf: Specifies the outputs of the Terraform module, which might be used by other modules or by Terragrunt in other configurations.

- variable.tf: Contains variable declarations for the Terraform module. These variables are overridden by Terragrunt using environment-specific values.

How It All Works Together

- Reusable Infrastructure Code: The Terraform code in the infra/ directory defines reusable infrastructure components. It is written once and can be applied without duplication to multiple environments (e.g., dev, prod).

- Environment-Specific Customizations: The environments/ directory houses environment-specific Terragrunt configurations. These files reference the Terraform modules and inject environment-specific variables. For example, the production environment might use different resource sizes or security settings than a development environment.

- Consistency Across Environments: Using Terragrunt to manage your Terraform code ensures consistency across environments. Each environment can have its own set of configurations, but the underlying infrastructure definitions remain the same. Multiple environments minimize errors and allow for easier management of complex, multi-environment setups.

- GitOps and CI/CD: This structure is well-suited for a GitOps workflow. All configurations are version-controlled in Git, and infrastructure changes are managed via pull requests. CI/CD pipelines can automatically apply these changes to the appropriate environment, ensuring a consistent and repeatable deployment.

Terraform Resources

Resource File – main.tf

The main.tf file is the heart of your Terraform configuration, defining the primary resources required to deploy and manage a Cloud SQL instance in Google Cloud. It leverages Terraform’s ability to integrate with Google Cloud services, manage secrets securely, and automate infrastructure provisioning. Each resource is carefully configured to ensure security, efficiency, and reliability, making it a robust solution for managing databases in the cloud. Below is a detailed breakdown of the key components within this file.

1. Retrieving Secrets from Google Secret Manager

The security team creates and manages secrets in the Secret Manager before deploying the infrastructure.

data "google_secret_manager_secret_version" "assistant_password" { project = var.project_id secret = var.cloud_sql_instance_sql_users.secret_name } data "google_secret_manager_secret_version" "root_password" { project = var.project_id secret = var.cloud_sql_instance_sql_users.root_secret_name }

This data block retrieves the secret passwords of the assistant user and root user from the Secret Manager. Externalizing sensitive information like passwords to Secret Manager enhances security and prevents secrets from being hardcoded in the Terraform configurations. However, it’s important to note that Terraform will store the retrieved secrets in its state file, which could be a potential security issue. Since Terraform state files can contain sensitive data, it’s crucial to ensure that the state is securely stored and access is tightly controlled to mitigate the risk of exposing sensitive information. One way to mitigate this risk is to encrypt the Terraform state. Other solutions that involve injection of the secrets at runtime at the time of need would require restructuring this Terrafrom implementation.

2. Generating a Unique Cloud SQL Instance Name

resource "random_id" "name" { byte_length = 4 }

This resource generates a random ID appended to the Cloud SQL instance name, ensuring that the instance name is unique across different environments or deployments. The variable byte_length determines the length of the generated random ID. Incorporating a random string into resource names provides the benefit of uniqueness, which helps avoid naming collisions in shared environments.

3. Defining the Cloud SQL Database Instance

resource "google_sql_database_instance" "northwind" { project = var.project_id region = var.region name = "${var.cloud_sql_instance_name_prefix}-${random_id.name.hex}" database_version = var.cloud_sql_instance_engine root_password = data.google_secret_manager_secret_version.root_password.secret_data settings { tier = var.cloud_sql_instance_machine_type disk_autoresize = var.cloud_sql_instance_disk_autoresize disk_size = var.cloud_sql_instance_disk_size disk_type = var.cloud_sql_instance_disk_type ip_configuration { ipv4_enabled = false private_network = data.terraform_remote_state.vpc.outputs.vpc_id } backup_configuration { enabled = var.cloud_sql_instance_backup_enabled start_time = var.cloud_sql_instance_backup_start_time } maintenance_window { day = var.cloud_sql_instance_maintenance_window_day hour = var.cloud_sql_instance_maintenance_window_hour update_track = var.cloud_sql_instance_maintenance_track } } deletion_protection = var.cloud_sql_instance_deletion_protection timeouts { create = var.cloud_sql_instance_resource_timeout delete = var.cloud_sql_instance_resource_timeout update = var.cloud_sql_instance_resource_timeout } }

This resource block defines the Cloud SQL database instance. The instance is configured using several variables that determine its name, region, database version, and root password.

- The variable database_version specifies the type of database and the version. In this deployment, we are deploying SQL Server, and the version is set to SQLSERVER_2019_STANDARD in the Terragrunt configuration.

- The settings block configures various aspects of the Cloud SQL instance, including machine type, disk settings, IP configuration, backup settings, and maintenance windows.

- The tier configuration parameter is critical to optimize for cost and quality of service. The tier specifies the machine type for the SQL instance, and you can choose a pre-defined machine type or a custom one (e.g., db-custom-2-3840, with 2 CPU and 4G RAM).

- The private_network specifies the VPC network for private IP access, ensuring secure communication within the Google Cloud environment. Here, we use VPC, defined in the cloud foundation IaC.

- Creating a Google Cloud SQL instance can take several minutes. Having the timeouts defined can save you time rerunning the process when it times out and possibly dealing with some cleanup.

4. Creating the Database within the Cloud SQL Instance

resource "google_sql_database" "northwind" { name = var.cloud_sql_instance_database_name instance = google_sql_database_instance.northwind.name timeouts { create = var.cloud_sql_instance_resource_timeout delete = var.cloud_sql_instance_resource_timeout update = var.cloud_sql_instance_resource_timeout } }

This resource creates a database within the Cloud SQL instance. It uses the instance name from the previously defined SQL instance resource and applies the same custom timeouts.

5. Defining the SQL User for the Cloud SQL Instance

resource "google_sql_user" "assistant_user" { instance = google_sql_database_instance.northwind.name name = var.cloud_sql_instance_sql_users.username password = data.google_secret_manager_secret_version.assistant_password.secret_data type = "BUILT_IN" timeouts { create = var.cloud_sql_instance_resource_timeout delete = var.cloud_sql_instance_resource_timeout update = var.cloud_sql_instance_resource_timeout } }

This resource creates a single SQL user within the Cloud SQL instance. The user’s credentials are securely retrieved from Google Secret Manager, and the user is granted access to the database.

Resource File – app.tf

The app.tf file handles multiple essential tasks, focusing on creating and managing cloud resources essential for operating your custom AI Agent in Kubert. These include creating a Google Cloud Storage (GCS) bucket with lifecycle rules, setting up a service account with necessary permissions, and storing sensitive data in Kubernetes secrets. Below is a detailed breakdown of the key components within this file.

1. Creating a Google Cloud Storage Bucket

resource "google_storage_bucket" "app_export_bucket" { name = var.kubert_assistant_app_bucket location = var.region force_destroy = true uniform_bucket_level_access = true versioning { enabled = false } lifecycle_rule { action { type = "Delete } condition { age = 3 matches_storage_class = ["STANDARD"] } } }

This resource defines a GCS bucket for exporting application data. The SQL Server AI Agent stores data exports and diagrams in the bucket. It includes several configurations to manage access, object lifecycle, and versioning. The lifecycle_rule implements a management policy that automatically deletes objects older than three days. This policy helps manage storage costs by cleaning up old, unnecessary data.

2. Creating a Service Account for Cloud SQL Proxy

module "sa" { source = "../../../../../../../modules/iam_service_account" project_id = var.project_id name = "cloudsql-application-sa" generate_key = true iam_project_roles = { "${var.project_id}" = [ "roles/cloudsql.client", "roles/cloudsql.instanceUser" ] } iam_storage_roles = { "${google_storage_bucket.app_export_bucket.name}" = ["roles/storage.objectAdmin"] } }

This module creates a service account for interacting with the Cloud SQL proxy and the GCS bucket. The service account is granted the necessary permissions to establish a connection to the database instance and permission to store files in the GCS bucket. The generate_key is set to true, and Terraform generates a private key for the service account, which will be used for authentication. This private key will be stored in the Terraform state, introducing potential security issues. There are several different ways to mitigate this issue. The Kubert team uses the GCP HashiCorp Vault plugin to manage authentication with Google Cloud and cloud secrets.

3. Storing the Service Account Key and Database Credentials in a Kubernetes Secret

resource "kubernetes_secret" "sa" { metadata { name = var.kube_database_secret namespace = data.terraform_remote_state.gke_man_after.outputs.kubert_assistant_namespace } data = { "credentials.json" = base64decode(module.sa.key.private_key) "db_user" = var.cloud_sql_instance_sql_users.username "db_pass" = data.google_secret_manager_secret_version.assistant_password.secret_data } }

This resource creates a Kubernetes secret that securely stores the service account’s private key and database credentials, making them accessible to applications running within the Kubernetes cluster. The secrets are exposed in the Terraform state and the Kubernetes secret. To further enhance security, it’s crucial to manage secrets dynamically using tools like HashiCorp Vault. By integrating Vault with Terraform and Kubernetes, we can ensure that secrets are injected at runtime and never stored in plaintext, significantly reducing the risk of exposure. Kubernetes secrets are base64 encoded and not encrypted.

4. Granting Public Read Access to the GCS Bucket

resource "google_storage_bucket_iam_member" "public_access" { bucket = google_storage_bucket.app_export_bucket.name role = "roles/storage.objectViewer" member = "allUsers" }

This resource grants public read access to the GCS bucket, allowing users to view the objects stored in the bucket. This public configuration is only for the demo. The SSO ties the user logged into the Kubert in production environments with the GCS bucket authorization.

Resource File – data.tf

The data.tf file in the Terraform configuration retrieves existing resources and state information on which the infrastructure depends. These data resources allow us to fetch data from other parts of your Google Cloud infrastructure, integrate with existing resources, and ensure that your Terraform configuration is consistent with the current state of your environment. Below is a detailed breakdown of the key components within this file.

1. Retrieving an Access Token for the Service Account

data "google_service_account_access_token" "default" { target_service_account = var.impersonate_account scopes = ["cloud-platform"] lifetime = "660s" }

This data block retrieves an access token for a Google Cloud service account that is being impersonated. This token is used to authenticate Terraform API requests made on behalf of the service account. Service account impersonation is a feature in Google Cloud that allows one service account (or user) to act as another service account. Impersonation helps delegate limited access and perform actions securely and efficiently in Google Cloud environments. When using CI/CD pipelines, impersonation allows the pipeline to securely perform actions (like deploying resources) without storing sensitive service account keys in the pipeline environment.

2. Retrieving Google Kubernetes Engine (GKE) Cluster Information

data "google_container_cluster" "cluster" { project = var.project_id name = var.cluster_name location = var.cluster_location }

This data block retrieves information about an existing Google Kubernetes Engine (GKE) cluster. Terraform uses this information to configure other resources interacting with the cluster, such as creating Kubernetes secret.

3. Retrieving VPC Information from Remote Terraform State

data "terraform_remote_state" "vpc" { backend = "gcs" config = { bucket = var.data_vpc_bucket prefix = var.data_vpc_bucket_prefix } }

This data block retrieves information about a Virtual Private Cloud (VPC) network from the remote Terraform state stored. The network team creates and verifies all VPCs to ensure security, adherence to organization policy, and reliability.

4. Retrieving GKE Management Information Post HashiCorp Vault Deployment

data "terraform_remote_state" "gke_man_after" { backend = "gcs" config = { bucket = var.data_gke_man_after_bucket prefix = var.data_gke_man_after_bucket_prefix } }

This data block retrieves information about the GKE management setup deployed after the HashiCorp Vault deployment. The GKE bootstrapping workflow installs HashiCorp Vault early to manage static and dynamic secrets and PKI for the rest of the tools installed.

Resource File – output.tf

The outputs.tf file in your Terraform configuration is designed to export essential information about the resources created during deployment. These outputs make it easier to reference critical infrastructure details in subsequent deployment pipeline stages, such as in Cloud Build processes or other CI/CD workflows.

Cloud Build Process: Creating the Database and Importing Data

With the infrastructure now provisioned using Terraform and Terragrunt, the next critical step is to automate the database setup and data import process. This is where Google Cloud Build comes into play, enabling a seamless CI/CD pipeline that integrates infrastructure management with application deployment.

This section explores how the Cloud Build process is configured to create the Cloud SQL database and import data. This process is essential for initializing the database with the necessary schema and ensuring your application can start with the required data.

Cloud Build Project Structure

The directory structure for the Cloud Build process provides an overview of the files involved in automating the database creation and data import. Each file plays a specific role in the build process, from configuration to data management. Below is a detailed breakdown of the directory structure:

. ├── README.md ├── add_role.sql ├── cloudbuild.yaml ├── import_data.sh └── nwnd.sql

The files in this directory work together to automate the process of setting up the database and importing initial data into your Google Cloud SQL instance:

- cloudbuild.yaml orchestrates the build process, defining the sequence of actions.

- import_data.sh executes the necessary commands to create the database and run SQL scripts.

- nwnd.sql and add_role.sql provide the SQL commands to create the database schema, populate it with data, and configure roles.

Cloud Build Configuration

The cloudbuild.yaml is the main configuration file for Cloud Build. It defines the steps Cloud Build will execute, including running scripts, importing data, and managing dependencies.

steps: - name: 'gcr.io/cloud-builders/gcloud' entrypoint: 'bash' args: - '-c' - | # Make the script executable chmod +x ./import_data.sh # Run the import_data.sh script with the required arguments ./import_data.sh \ "${_IMPORT_DATABASE_INSTANCE_NAME}" \ "${_IMPORT_DATABASE_INSTANCE_PROJECT}" \ "${_IMPORT_DATABASE_INSTANCE_REGION}" \ "tc-tekstack-kps-temp-data-import" \ "${_IMPORT_DATABASE_NAME}" \ "${_IMPORT_USERNAME}" secretEnv: ['DATABASE_PASS'] dir: 'sql_server_cloud_build_import/' options: logging: CLOUD_LOGGING_ONLY pool: name: projects/your-project/locations/your-location/workerPools/your-worker-pool availableSecrets: secretManager: - versionName: projects/$PROJECT_ID/secrets/${_DATABASE_PASSWORD_KEY}/versions/latest env: DATABASE_PASS

The build process performs a single step that executes a shell script. This script is responsible for creating and importing the data into the database. The script is executed with several arguments defining the operation’s specifics, such as the database instance name, project, region, and credentials. These credentials are retrieved from the Terraform outputs. The secretEnv configuration allows Cloud Build to securely pass the database password to the script by retrieving it from the Secret Manager. Ensuring the password is never exposed in plaintext during the build process. The availableSecrets block defines which secrets are available in the build steps. It specifies the secret’s location in Secret Manager and maps it to an environment variable (DATABASE_PASS). The configuration also defines private pools, dedicated pools of workers that offer greater customization over the built environment, including the ability to access resources in a private network. Like default pools, private pools are hosted and fully managed by Cloud Build and scale up and down to zero, with no infrastructure to set up, upgrade, or scale. Because private pools are customer-specific resources, you can configure them in more ways. We use private pools and workers with cost-effective configurations for these demo use cases. The builds are slower and less expensive.

Analysis of import_data.sh

The import_data.sh script is a Bash script designed to automate copying an SQL file to GCS, importing it into a Cloud SQL instance, executing a Data Definition Language (DDL) script, and cleaning up the temporary resources created during the process. Below is a detailed breakdown of the script’s key components and their purposes.

1. Installing sqlcmd and Tools

The cloud build step executes the script with a docker container created from the gcloud(gcr.io/cloud-builders/gcloud) image. The gcloud image is a basic image created to support the gcloud CLI use cases. We need to add additional libraries to support the SQL Server data import.

log "Installing sqlcmd and tools" curl https://packages.microsoft.com/keys/microsoft.asc | tee /etc/apt/trusted.gpg.d/microsoft.asc curl https://packages.microsoft.com/config/ubuntu/20.04/prod.list | tee /etc/apt/sources.list.d/mssql-release.list apt-get update ACCEPT_EULA=Y apt-get install -y msodbcsql18 mssql-tools18 unixodbc-dev echo 'export PATH="$PATH:/opt/mssql-tools18/bin"' >> ~/.bashrc source ~/.bashrc

This block installs the necessary SQL Server command-line tools (sqlcmd, msodbcsql18, etc.) required to interact with the SQL Server instance.

2. Creating a Temporary GCS Bucket

log "Creating temporary bucket $BUCKET_NAME" if gsutil ls -b gs://$BUCKET_NAME &>/dev/null; then log "Bucket $BUCKET_NAME already exists" else gsutil mb gs://$BUCKET_NAME || { log "Failed to create temporary bucket"; exit 1; } fi

This section checks if a temporary GCS bucket exists; if not, it creates one. This bucket stores the SQL file before importing it into the Cloud SQL instance.

3. Copying SQL File to GCS

log "Copying \"$SQL_FILE\" to gs://$BUCKET_NAME/sql/$SQL_FILE" if gsutil ls gs://$BUCKET_NAME/sql/$SQL_FILE &>/dev/null; then log "File $SQL_FILE already exists in the bucket" else gsutil cp "$SQL_FILE" gs://$BUCKET_NAME/sql/$SQL_FILE || { log "Failed to copy SQL file to GCS"; exit 1; } fi

The script copies the SQL file (nwnd.sql) to the temporary GCS bucket. If the file exists in the bucket, it logs that fact and skips the copy operation.

4. Fetching Service Account and Granting Permissions

log "Fetching service account for $INSTANCE_NAME" SERVICE_ACCOUNT=$(gcloud sql instances describe $INSTANCE_NAME --format="value(serviceAccountEmailAddress)") || { log "Failed to fetch service account"; exit 1; } log "Granting permissions to $SERVICE_ACCOUNT on gs://$BUCKET_NAME" gsutil iam ch serviceAccount:$SERVICE_ACCOUNT:objectAdmin gs://$BUCKET_NAME || { log "Failed to grant permissions"; exit 1; }

The script retrieves the service account associated with the SQL instance, which is necessary for granting access to the GCS bucket. The script then grants objectAdmin permissions to the service account on the GCS bucket, allowing it to access the SQL file for import.

5. Dropping and Creating the Database

log "Dropping or truncating the database $DATABASE_NAME" sqlcmd -S tcp:$INSTANCE_IP -U sqlserver -P "$DATABASE_PASS" -Q "DROP DATABASE IF EXISTS [$DATABASE_NAME]; CREATE DATABASE [$DATABASE_NAME];" -C || { log "Failed to drop or create database"; exit 1; }

Before importing new data, the script drops the existing database (if it exists) and recreates it. This ensures a clean environment for the data import.

6. Importing the SQL File to Cloud SQL

log "Importing $SQL_FILE to $INSTANCE_NAME" gcloud sql import sql $INSTANCE_NAME gs://$BUCKET_NAME/sql/$SQL_FILE --database=$DATABASE_NAME || { log "Failed to import SQL file to Cloud SQL"; exit 1; }

The script uses gcloud sql import command to import the SQL file from GCS into the Cloud SQL database. This step initializes the database with the schema and data defined in nwnd.sql.

7. Replacing Placeholders and Executing the DDL Script

log "Replacing placeholders in the DDL script" sed -i "s/{{DATABASE_NAME}}/$DATABASE_NAME/g" "$DDL_SCRIPT" sed -i "s/{{USERNAME}}/$USERNAME/g" "$DDL_SCRIPT" log "Executing the DDL script" sqlcmd -S tcp:$INSTANCE_IP -U sqlserver -P "$DATABASE_PASS" -d $DATABASE_NAME -i "$DDL_SCRIPT" -C || { log "Failed to execute DDL script"; exit 1; }

The script replaces placeholders in the add_role.sql DDL script with actual values (DATABASE_NAME and USERNAME). The DDL script is then executed to apply additional configurations, such as role creation or permission assignments, within the database.

8. Cleaning Up the Temporary Bucket

log "Deleting temporary bucket $BUCKET_NAME" gsutil rm -r gs://$BUCKET_NAME || { log "Failed to delete temporary bucket"; exit 1; }

After the import and setup are complete, the script deletes the temporary GCS bucket to clean up any resources that are no longer needed.

Conclusion

In this blog, we’ve taken a deep dive into the DevOps practices required to deploy a custom AI Agent for SQL Server in Kubert. By leveraging Terraform, Terragrunt, and Google Cloud Build, we’ve automated the infrastructure setup and database initialization, ensuring that our deployments are consistent, secure, and efficient. These practices streamline the deployment process and align with industry standards for cloud-native applications, ensuring your infrastructure is robust and scalable. This foundation is critical for supporting the AI Agent, which will be the focus of our next blog. We’ll explore how the agent processes user prompts, converts them into SQL queries, and interacts with the SQL Server database. Stay tuned for a closer look at the AI-driven side of this powerful integration.